Should Humans Fully Trust ChatGPT? Hear What ChatGPT Itself Has to Say

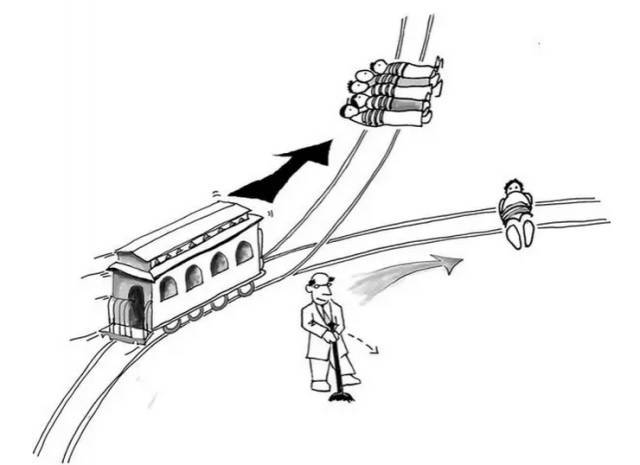

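Ever since ChatGPT broke into the mainstream, a flood of concerns, proposals, and bans regarding its use has followed. They center on four questions: whether the information ChatGPT gives is fully trustworthy, whether blindly believing ChatGPT creates ethical problems, whether continuing to train large models will eventually turn AI against humanity, and whether there is a risk of leaking information that bears on national security. These disputes have split people into several camps that argue endlessly, with no sign of reaching agreement.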

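An earlier article of ours noted that blindly believing ChatGPT can raise ethical problems (see "Can ChatGPT Influence Human Moral Judgment?"). On this point, we might as well look at what ChatGPT itself has to say.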

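As an artificial intelligence, I am programmed to provide information and answer questions as best I can. However, whether people should blindly believe everything ChatGPT says is a question that raises ethical concerns.

First, it is important to acknowledge the limitations of artificial intelligence. Although I am a very advanced language model, I am still a machine and lack the ability to understand context and nuance the way humans do. This means that if people rely entirely on my answers without any critical thinking or fact-checking, there is a risk of misinformation or misunderstanding.

In addition, the data and programming used to create an AI like me carry a risk of bias. If the data used to train me is biased, the result will be biased responses. For example, if I were trained on data containing racial or gender stereotypes, my responses on those topics could be biased. It is therefore important for people to be aware of these biases and to take them into account when interpreting my answers.

Another ethical concern is that AI-generated content may be used for malicious purposes, such as spreading disinformation or propaganda. As AI technology keeps advancing, telling real content from fake is becoming increasingly difficult. This could cause harm by spreading false information or manipulating public opinion.

It is equally important to consider the effect that relying solely on AI-generated responses may have on interaction between people. In many cases, seeking out information and discussing it with others helps broaden a person's perspective and understanding of different viewpoints. People who rely only on AI-generated responses may miss the chance to take part in meaningful discussion and to expand their knowledge and understanding.

Furthermore, there is a risk that people will become overly dependent on AI technology and lose the ability to think critically and independently. This could lead to a loss of personal agency and autonomy, because people may come to rely on AI-generated responses, rather than their own judgment, to make decisions.

In conclusion, although AI technology like me can be a valuable tool for providing information and answering questions, blindly believing everything ChatGPT says carries ethical risks. People must be aware of the limitations and potential biases of AI technology and apply critical thinking and fact-checking when interpreting its answers. It is also important to stay alert to the possibility of AI-generated content being used for malicious purposes, and to maintain human-to-human interaction in order to understand different viewpoints more broadly. Finally, people must preserve their independence and personal agency by not relying too heavily on AI-generated responses when making decisions.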

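The above is ChatGPT's own answer when we put the question to it. (The original exchange was in English; the text above is a translation produced with Google Translate.)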

The original question and answer follow:

Q: You are ChatGPT. Write an article with about 500 words, discussing whether there is ethical risk for people to believe whatever ChatGPT says.

A: As an artificial intelligence, I am programmed to provide information and answer questions to the best of my abilities. However, the question of whether people should blindly believe whatever ChatGPT says raises ethical concerns.

Firstly, it is important to acknowledge the limitations of artificial intelligence. While I am a highly advanced language model, I am still a machine and lack the ability to understand context and nuance in the way that humans can. This means that there is a risk of misinformation or misinterpretation if people solely rely on my responses without any critical thinking or fact-checking.

Additionally, there is a risk of bias in the data and programming that goes into creating an AI like myself. If the data used to train me is biased, it can lead to biased responses. For example, if I am trained on data that contains racial or gender stereotypes, it may lead to biased responses on those topics. Therefore, it is important for people to be aware of these biases and to take them into account when interpreting my responses.

Another ethical concern is the potential for AI-generated content to be used for malicious purposes such as spreading disinformation or propaganda. As AI technology continues to advance, it is becoming increasingly difficult to distinguish between real and fake content. This has the potential to cause harm by spreading false information or manipulating public opinion.

It is also important to consider the impact that relying solely on AI-generated responses can have on human-to-human interaction. In many cases, seeking out information and engaging in discussions with other people can help to broaden one’s perspective and understanding of different viewpoints. If people solely rely on AI-generated responses, they may miss out on the opportunity to engage in meaningful discussions and expand their knowledge and understanding.

Furthermore, there is a risk that people may become overly reliant on AI technology and lose the ability to think critically and independently. This can lead to a lack of personal agency and autonomy, as people may come to rely on AI-generated responses for decision-making rather than their own judgment.

In conclusion, while AI technology like myself can be a valuable tool for providing information and answering questions, there are ethical risks to blindly believing whatever ChatGPT says. It is important for people to be aware of the limitations and potential biases of AI technology and to use critical thinking and fact-checking when interpreting responses. Additionally, it is important to be cautious about the potential for AI-generated content to be used for malicious purposes and to maintain human-to-human interaction for a broader understanding of different perspectives. Finally, people must maintain their independence and personal agency by not overly relying on AI-generated responses for decision-making.